In recent years, semantic segmentation has evolved from a purely academic exercise into one of the most powerful tools in the field of computer vision. Among the many branches of segmentation, face parsing holds a particularly interesting place due to its detailed pixel-level interpretation of human faces. Face parsing goes beyond simple detection by assigning each pixel of an image a label corresponding to a specific facial component—such as eyes, lips, hair, and skin.

This post explores the fundamental principles, architecture, and implementation of face parsing, with a focus on transformer-based segmentation models such as SegFormer and how they are fine-tuned for facial segmentation. It walks through code samples and analysis techniques and leaves downstream applications aside.

What Is Face Parsing?

Face parsing is a specialized subset of semantic segmentation that targets facial regions in an image and labels them at the pixel level. While facial recognition identifies a person, face parsing focuses on what parts of the face exist in a given image, allowing systems to label every feature individually.

For instance, when you input an image, a face parsing model returns a corresponding segmentation map, where each pixel in the image is associated with a class such as “hair,” “skin,” “left eye,” or “mouth.” This task requires a deep understanding of spatial relationships and high-resolution feature extraction—something that modern transformer-based architectures are well-equipped to handle.
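
To make the output format concrete, here is a minimal sketch of a segmentation map as a NumPy array. The class ids and names are hypothetical placeholders chosen for illustration, not the label set of any particular model.

```python
import numpy as np

# Hypothetical label set, for illustration only.
ID2LABEL = {0: "background", 1: "skin", 2: "hair", 3: "mouth"}

# A toy 4x4 segmentation map: one integer class id per pixel.
seg_map = np.array([
    [2, 2, 2, 0],
    [2, 1, 1, 0],
    [0, 1, 3, 0],
    [0, 1, 1, 0],
])

# One boolean mask per class isolates a single facial component.
masks = {name: seg_map == idx for idx, name in ID2LABEL.items()}

# Fraction of the image covered by each class.
coverage = {name: float(mask.mean()) for name, mask in masks.items()}
```

A real model produces the same kind of array, only at image resolution and with its own class list.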

Model Architecture Behind Face Parsing

Modern face parsing models rely heavily on transformer encoders derived from architectures like SegFormer, which is known for its efficiency and scalability. Below is a simplified explanation of the architectural elements involved:

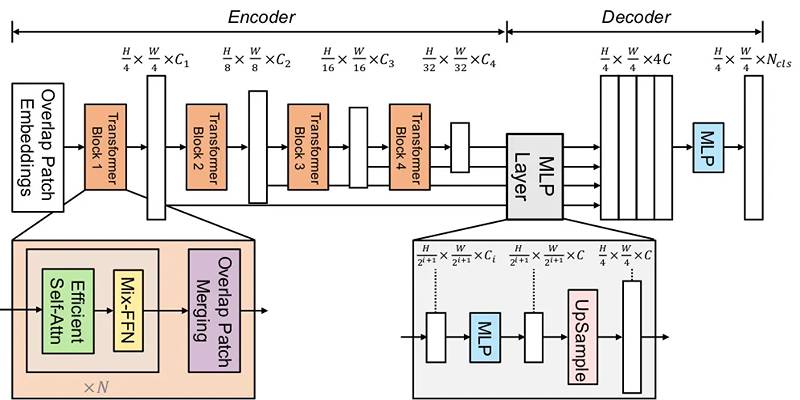

1. Transformer Encoder (Backbone)

The encoder extracts multi-scale features from the input image using hierarchical attention. Unlike convolutional neural networks (CNNs), transformers learn relationships between spatial regions through self-attention, making them robust in capturing both global context and local details.

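A key characteristic of SegFormer's encoder is that it has no explicit positional embeddings. In a standard vision transformer, these embeddings tell the model where each patch sits in the image, but they are learned for a fixed input resolution and must be interpolated when the test resolution differs, which tends to hurt accuracy. SegFormer instead relies on a 3x3 depthwise convolution inside each feed-forward block (Mix-FFN) to supply location information, so the same weights adapt to different image sizes.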
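
The SegFormer paper describes replacing positional encodings with a 3x3 depthwise convolution inside the feed-forward block (Mix-FFN). The following is a simplified sketch of that idea, not the library's implementation; the class name, dimensions, and layer ordering are assumptions made for illustration.

```python
import torch
from torch import nn


class MixFFN(nn.Module):
    """Simplified feed-forward block with a depthwise 3x3 convolution."""

    def __init__(self, dim, hidden_dim):
        super().__init__()
        self.fc1 = nn.Linear(dim, hidden_dim)
        # groups=hidden_dim makes the convolution depthwise (one filter per channel).
        self.dwconv = nn.Conv2d(hidden_dim, hidden_dim, kernel_size=3,
                                padding=1, groups=hidden_dim)
        self.act = nn.GELU()
        self.fc2 = nn.Linear(hidden_dim, dim)

    def forward(self, tokens, height, width):
        # tokens: (batch, height * width, dim)
        batch = tokens.shape[0]
        x = self.fc1(tokens)
        # Restore the 2D layout so the convolution sees spatial neighbours.
        x = x.transpose(1, 2).reshape(batch, -1, height, width)
        x = self.dwconv(x)
        # Flatten back to a token sequence.
        x = x.flatten(2).transpose(1, 2)
        return self.fc2(self.act(x))


# The same weights accept token grids of different sizes.
ffn = MixFFN(dim=32, hidden_dim=128)
small = ffn(torch.randn(1, 16 * 16, 32), height=16, width=16)
large = ffn(torch.randn(1, 24 * 20, 32), height=24, width=20)
```

Nothing in the block is tied to a fixed number of tokens, which is why no positional table has to be resized when the input resolution changes.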

2. MLP-Based Decoder

Instead of using complex deconvolutional layers, the SegFormer design uses a lightweight multi-layer perceptron (MLP) to decode the features from the encoder. It efficiently aggregates multi-scale representations and produces a pixel-wise classification map.
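
A rough sketch of such a decoder is shown below. It is a simplification under stated assumptions: the channel sizes, the embedding width, and the 19-class output are illustrative values, and the normalization and dropout layers of the real model are omitted. A 1x1 convolution is used as a per-pixel linear layer.

```python
import torch
import torch.nn.functional as F
from torch import nn


class MLPDecoder(nn.Module):
    """Simplified all-MLP decoder over multi-scale encoder features."""

    def __init__(self, in_dims, embed_dim, num_classes):
        super().__init__()
        # One per-pixel linear projection for each encoder stage.
        self.proj = nn.ModuleList(
            [nn.Conv2d(d, embed_dim, kernel_size=1) for d in in_dims]
        )
        self.fuse = nn.Conv2d(embed_dim * len(in_dims), embed_dim, kernel_size=1)
        self.classify = nn.Conv2d(embed_dim, num_classes, kernel_size=1)

    def forward(self, features):
        # Bring every stage to the resolution of the first (largest) feature map.
        target = features[0].shape[2:]
        upsampled = [
            F.interpolate(p(f), size=target, mode="bilinear", align_corners=False)
            for p, f in zip(self.proj, features)
        ]
        fused = torch.relu(self.fuse(torch.cat(upsampled, dim=1)))
        return self.classify(fused)


# Four feature maps at 1/4, 1/8, 1/16, and 1/32 of a 128x128 input.
features = [
    torch.randn(1, c, s, s) for c, s in zip((32, 64, 160, 256), (32, 16, 8, 4))
]
decoder = MLPDecoder(in_dims=(32, 64, 160, 256), embed_dim=64, num_classes=19)
logits = decoder(features)
```

The output keeps the resolution of the first stage, which is why the logits come out at a quarter of the input size.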

3. Output Logits

The model's output is a tensor of shape (batch_size, num_classes, height/4, width/4), where height and width are those of the preprocessed input and each channel corresponds to one facial part class. The highest-scoring class at each pixel location determines the final label for that pixel. This modular design keeps the architecture lightweight; actual inference speed depends on the encoder size and the hardware.
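
The "highest score wins" rule is an argmax over the class dimension. Here is a small NumPy sketch with made-up scores for three classes on a 2x2 image:

```python
import numpy as np

# Toy logits with shape (batch_size=1, num_classes=3, height=2, width=2).
toy_logits = np.array([[
    [[2.0, 0.1], [0.3, 0.2]],  # scores for class 0
    [[0.5, 1.5], [0.1, 0.9]],  # scores for class 1
    [[0.1, 0.2], [2.2, 0.4]],  # scores for class 2
]])

# Collapse the class axis: the result has shape (1, 2, 2).
label_map = toy_logits.argmax(axis=1)
# label_map[0] is [[0, 1], [2, 1]]
```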

Implementing a Face Parsing Model Using Transformers

This section demonstrates how to implement a face parsing pipeline using PyTorch and the Hugging Face transformers library.

Step 1: Setup and Import Required Libraries

import torch

from transformers import SegformerFeatureExtractor, SegformerForSemanticSegmentation

from PIL import Image

import matplotlib.pyplot as plt

import numpy as np

import requests

We import the essential modules for loading the model, processing images, and visualizing the segmentation results.

Step 2: Configure the Device and Load the Model

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

feature_extractor = SegformerFeatureExtractor.from_pretrained("jonathandinu/face-parsing")

model = SegformerForSemanticSegmentation.from_pretrained("jonathandinu/face-parsing").to(device)

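Here, SegformerFeatureExtractor handles image preprocessing, and the model is moved to the selected device. The weights come from a public Hugging Face repository fine-tuned for face parsing. Recent releases of transformers deprecate SegformerFeatureExtractor in favor of SegformerImageProcessor, which is used the same way.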

Step 3: Load and Preprocess the Image

img_url = "https://images.unsplash.com/photo-1619681390881-2c1e17a3e738"

image = Image.open(requests.get(img_url, stream=True).raw).convert("RGB")

inputs = feature_extractor(images=image, return_tensors="pt")

pixel_values = inputs["pixel_values"].to(device)

The image is fetched from Unsplash, converted to RGB, and turned into a normalized tensor by the feature extractor.
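
For intuition, the numeric part of that preprocessing can be sketched in NumPy. This assumes the common defaults of rescaling by 1/255 and normalizing with the ImageNet mean and standard deviation; the checkpoint's own preprocessor configuration is the authority, and the resizing step is left out here.

```python
import numpy as np

# Assumed defaults: ImageNet channel statistics.
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def normalize_image(rgb_uint8):
    """Turn an (H, W, 3) uint8 image into a (1, 3, H, W) float32 array."""
    scaled = rgb_uint8.astype(np.float32) / 255.0
    normalized = (scaled - IMAGENET_MEAN) / IMAGENET_STD
    # Channels first, plus a leading batch dimension.
    return normalized.transpose(2, 0, 1)[np.newaxis, ...]


dummy = np.full((4, 6, 3), 255, dtype=np.uint8)  # an all-white 4x6 image
batch = normalize_image(dummy)
```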

Step 4: Forward Pass and Get Prediction

with torch.no_grad():

    outputs = model(pixel_values=pixel_values)

logits = outputs.logits  # Shape: [1, num_labels, H/4, W/4]

The model outputs raw class scores (logits) for each label and each pixel.
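
Logits are unnormalized, so they are not probabilities. If a per-pixel confidence is useful, a softmax over the class axis gives one. The sketch below uses random NumPy values as a stand-in for real logits; the array shape is an arbitrary choice.

```python
import numpy as np


def softmax(scores, axis):
    # Subtract the maximum first for numerical stability.
    shifted = scores - scores.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


rng = np.random.default_rng(0)
demo_logits = rng.normal(size=(1, 5, 8, 8))  # (batch, classes, height, width)

probs = softmax(demo_logits, axis=1)  # sums to 1 across classes at each pixel
confidence = probs.max(axis=1)        # probability of the winning class
```

Softmax does not change which class wins, so the argmax of the probabilities equals the argmax of the logits.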

Step 5: Upsample the Output to Match Original Image Size

original_size = image.size[::-1]  # PIL reports (width, height); interpolate expects (height, width)

upsampled_logits = torch.nn.functional.interpolate(

    logits,

    size=original_size,

    mode="bilinear",

    align_corners=False

)

Since the output logits are downsampled, we resize them to match the original image dimensions using bilinear interpolation.
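
One detail that is easy to get wrong is the axis order: PIL reports size as (width, height), while interpolate expects (height, width). The sketch below checks this with a blank stand-in image and random logits; the 19 classes and the 128x128 logit grid are illustrative assumptions.

```python
import torch
import torch.nn.functional as F
from PIL import Image

demo_image = Image.new("RGB", (400, 600))  # width=400, height=600
target_size = demo_image.size[::-1]        # (600, 400) = (height, width)

coarse = torch.randn(1, 19, 128, 128)      # stand-in for model logits
full = F.interpolate(coarse, size=target_size, mode="bilinear",
                     align_corners=False)
labels = full.argmax(dim=1)                # one class id per original pixel
```

Interpolating the logits and taking the argmax afterwards usually gives smoother boundaries than upsampling an already discretized label map.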

Step 6: Get Class Labels and Visualize

predicted = upsampled_logits.argmax(dim=1)[0].cpu().numpy()

plt.figure(figsize=(8, 6))

plt.imshow(predicted, cmap='tab20b')

plt.axis('off')

plt.title("Face Parsing Output")

plt.show()

This step maps each pixel to its corresponding label and visualizes the final segmentation mask using a color-coded scheme.
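
A colormap such as tab20b assigns colors automatically. For a fixed color per class, or to blend the mask over the photo, a small palette lookup is enough. This is a sketch with a hypothetical three-class palette; the helper names `colorize` and `overlay` are introduced here for illustration.

```python
import numpy as np


def colorize(label_map, palette):
    """Map an (H, W) array of class ids to an (H, W, 3) uint8 RGB image."""
    return palette[label_map]


def overlay(image_rgb, color_mask, alpha=0.5):
    """Alpha-blend a color mask over an RGB image of the same size."""
    blended = ((1 - alpha) * image_rgb.astype(np.float32)
               + alpha * color_mask.astype(np.float32))
    return blended.round().astype(np.uint8)


# Hypothetical palette: row i holds the RGB color for class i.
demo_palette = np.array([[0, 0, 0], [250, 200, 170], [90, 60, 30]], dtype=np.uint8)
demo_labels = np.array([[0, 1], [2, 1]])
demo_photo = np.full((2, 2, 3), 100, dtype=np.uint8)  # flat gray stand-in image

color_mask = colorize(demo_labels, demo_palette)
blended = overlay(demo_photo, color_mask)
```

With real data, `demo_labels` would be the `predicted` array and `demo_photo` would be `np.array(image)`.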

Why Transformer-Based Face Parsing Works Well

Face parsing is inherently complex. Facial features can vary greatly due to lighting, angles, expressions, and occlusions. The advantage of using transformer-based models like SegFormer lies in their ability to:

- Capture global dependencies using self-attention

- Scale from small to large encoder variants while keeping self-attention memory-efficient through sequence reduction

- Avoid fixed positional embeddings, so they generalize better across input resolutions

- Handle multiple resolutions with ease

Moreover, when fine-tuned on face-specific datasets like CelebAMask-HQ, these models can learn subtle nuances of human facial anatomy, enabling highly accurate segmentation.

Evaluation and Benchmarking

The effectiveness of a face parsing model is typically assessed using standard metrics such as:

- Pixel Accuracy (PA): Measures the percentage of correctly predicted pixels.

- Mean Intersection over Union (mIoU): Averages the IoU over all classes.

- Boundary F1 Score: Evaluates how well the model preserves boundaries between classes.
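
The first two metrics can both be computed from a confusion matrix. Below is a minimal NumPy sketch on a toy prediction; the Boundary F1 score needs boundary extraction and a distance tolerance, so it is not covered here.

```python
import numpy as np


def confusion_matrix(pred, target, num_classes):
    """Rows are ground-truth classes, columns are predicted classes."""
    idx = target.ravel() * num_classes + pred.ravel()
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def pixel_accuracy(cm):
    return np.trace(cm) / cm.sum()


def mean_iou(cm):
    intersection = np.diag(cm)
    union = cm.sum(axis=0) + cm.sum(axis=1) - intersection
    present = union > 0  # skip classes absent from both prediction and target
    return (intersection[present] / union[present]).mean()


target = np.array([[0, 0, 1], [1, 2, 2]])
pred = np.array([[0, 1, 1], [1, 2, 0]])

cm = confusion_matrix(pred, target, num_classes=3)
acc = pixel_accuracy(cm)  # 4 of 6 pixels correct
miou = mean_iou(cm)       # per-class IoU of 1/3, 2/3, 1/2 averages to 0.5
```

Pixel accuracy can look high when large classes such as skin or background dominate, which is why mIoU is usually reported alongside it.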

Transformer-based face parsing models are generally reported to match or outperform older CNN-based methods on these metrics, especially on complex and diverse image sets, though the margin depends on the dataset and training setup.

Conclusion

Face parsing represents a fascinating convergence of deep learning and human-focused computer vision. By breaking down the human face into its semantic parts, it offers granular visual understanding, achieved here through transformer-based architectures like SegFormer. This post explored the technical foundation of face parsing, from its core concepts to its architectural design, and implemented a working model pipeline. The lightweight, modular design, combined with the absence of positional encodings and the use of multi-scale feature extraction, lets modern face parsing models operate accurately and efficiently.